Introduction: A Major Leap Forward for AI Developers

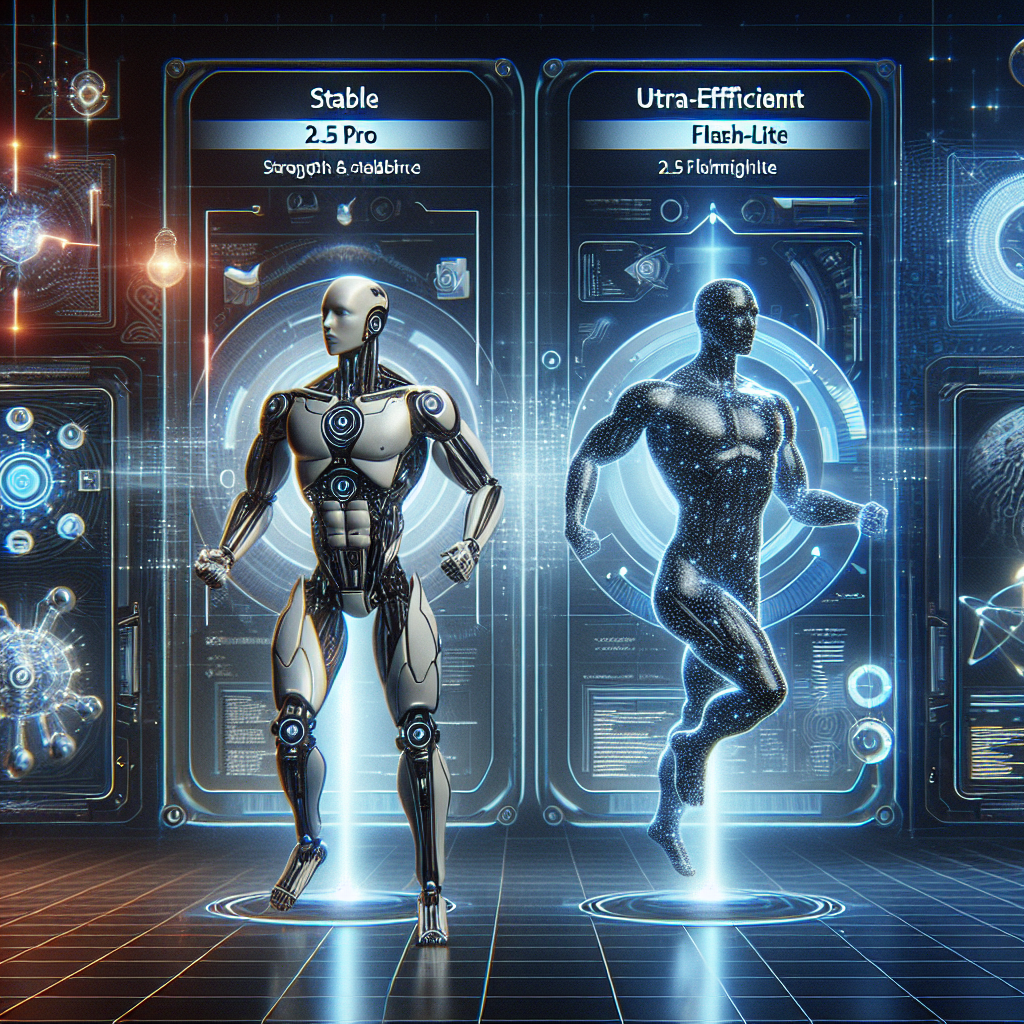

Google has announced the stable release of Gemini 2.5 Pro and introduced a lightweight, efficiency-focused model called Gemini 2.5 Flash Lite. With these updates, Google aims to cover a broader range of development needs, from high-performance enterprise tasks to latency-sensitive edge use cases, positioning Gemini as a model family that can scale with demand.

What’s New in Gemini 2.5 Pro?

Stability and Developer Readiness

Google confirmed that Gemini 2.5 Pro is now stable and ready for developer integration. In practice, a stable release means developers can build AI-powered products on it with less risk of the behavior changes and regressions that come with preview models.

Performance Improvements

Gemini 2.5 Pro builds on Google’s latest training techniques and large-scale infrastructure. These enhancements are meant to translate into improved reasoning, more accurate retrieval-augmented generation (RAG), and better multi-turn conversation handling, with higher reliability and efficiency.

Enterprise-Ready AI

Designed for developers building AI applications at scale, Gemini 2.5 Pro is well suited to business solutions in sectors such as healthcare, finance, and education. Its long-context capabilities and improved latency make it especially attractive for companies building GenAI workflows and integrating them into existing enterprise tools.

Introducing Gemini 2.5 Flash Lite: Speed and Efficiency in One

Designed for Rapid Response

Gemini 2.5 Flash Lite is Google’s most efficient Gemini variant yet. Built for use cases that require real-time responsiveness and operate under strict computational constraints, this model delivers high performance in lightweight deployments.

Perfect Fit for Mobile and Edge Devices

Whereas Pro-tier models are sized for heavyweight workloads, Flash Lite is aimed at products with tight latency and cost budgets, such as features in mobile apps and edge computing platforms. This makes it a good fit for AI assistants, smart appliances, wearables, and other compact smart devices.

Cost-Effective AI for Developers

Because of its efficiency-first design, Flash Lite reduces inference costs compared with the larger models in the family. Developers can build responsive, affordable AI-powered features while giving up relatively little in quality or reliability.

Gemini AI Family: A Unified Ecosystem

Google’s Gemini AI offerings now include a tiered ecosystem designed to fit a wide spectrum of needs:

- Gemini 2.5 Pro: High-performance model optimized for enterprise and developer platforms, offering long context understanding and improved accuracy.

- Gemini 2.5 Flash: A lightweight, fast model introduced earlier for cost-conscious applications.

- Gemini 2.5 Flash Lite: The newest ultra-efficient model suitable for edge devices and mobile apps.

This tiered approach allows developers to select the best-fit model based on user context, latency demands, and hardware availability.
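As a rough illustration of that selection logic, the sketch below maps a workload description to one of the three tiers. The model identifier strings, the `Workload` fields, and the 500 ms cut-off are all assumptions made for this example, not values published by Google, so treat it as a starting point rather than a recommended policy.

```python
from dataclasses import dataclass

# Model identifiers are assumptions for illustration; confirm the exact
# strings against Google's current model list before using them.
PRO = "gemini-2.5-pro"
FLASH = "gemini-2.5-flash"
FLASH_LITE = "gemini-2.5-flash-lite"


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of what a feature needs from the model."""
    needs_deep_reasoning: bool  # e.g. long documents, multi-step analysis
    latency_budget_ms: int      # how long the caller can wait for a reply
    cost_sensitive: bool        # high request volume or a tight budget


def pick_model(w: Workload) -> str:
    """Return a model tier for the workload using made-up thresholds."""
    if w.needs_deep_reasoning:
        return PRO
    # 500 ms is an arbitrary cut-off chosen for this sketch.
    if w.latency_budget_ms < 500 or w.cost_sensitive:
        return FLASH_LITE
    return FLASH


if __name__ == "__main__":
    print(pick_model(Workload(True, 5000, False)))
    print(pick_model(Workload(False, 200, False)))
    print(pick_model(Workload(False, 2000, False)))
```

Keeping the decision in one small function makes it easy to revisit the thresholds once real latency and cost measurements are available.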

Seamless API Access via Gemini Developer Platform

As part of its commitment to democratizing AI, Google has made these models available through its Gemini API, accessible via Vertex AI and Google AI Studio. Because the models share one API, developers can switch between them as performance and cost requirements change, with minimal friction.
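One way to keep that switch cheap in application code is to treat the model name as configuration. The sketch below is a minimal illustration under stated assumptions: `SendFn`, `make_generator`, `MODEL_BY_FEATURE`, and `fake_send` are hypothetical names invented here, the model identifier strings are assumed, and the transport is a stand-in that makes no network call. A real application would replace `fake_send` with a call into whichever official client it uses.

```python
from typing import Callable, Dict

# Hypothetical transport signature: (model_id, prompt) -> response text.
# In a real application this would wrap the official client of your choice.
SendFn = Callable[[str, str], str]

# Assumed model identifiers, keyed by hypothetical feature names.
MODEL_BY_FEATURE: Dict[str, str] = {
    "contract_review": "gemini-2.5-pro",
    "chat_autocomplete": "gemini-2.5-flash-lite",
}


def make_generator(model: str, send: SendFn) -> Callable[[str], str]:
    """Bind a model name to a transport so callers only pass a prompt."""
    def generate(prompt: str) -> str:
        return send(model, prompt)
    return generate


def fake_send(model: str, prompt: str) -> str:
    """Stand-in transport for this sketch; echoes instead of calling an API."""
    return f"[{model}] {prompt}"


if __name__ == "__main__":
    autocomplete = make_generator(MODEL_BY_FEATURE["chat_autocomplete"], fake_send)
    print(autocomplete("Suggest a reply"))
```

With this shape, moving a feature from one tier to another is a one-line change in `MODEL_BY_FEATURE` rather than an edit at every call site.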

Competitive Edge: How Gemini Stacks Up

Context Handling and Reasoning Depth

With Gemini 2.5 Pro, Google has narrowed the gap between its models and OpenAI’s offerings. Reported improvements include better handling of longer documents and more nuanced dialogue flow, particularly in RAG scenarios, which matters for knowledge-intensive tasks.

Environmental Efficiency

Because the Flash and Flash Lite variants match compute to the needs of the task, developers can avoid running a large model where a small one will do, which supports more energy-conscious AI development. This reflects a growing industry focus on sustainable AI that does not compromise quality.

Multilingual and Multimodal Capabilities

Google’s Gemini family continues to advance not just in text but in multimodal capabilities, including understanding images, audio, and video. Support for multiple languages helps developers and users around the world benefit from Gemini capabilities.

Looking Ahead: What This Means for the Future of AI

Google’s latest Gemini update signals a pivotal shift in how AI will be deployed across industries. By offering a stable Pro version alongside lightweight models like Flash Lite, Google provides developers with the flexibility to innovate faster and more affordably.

These developments open opportunities for AI integration into a multitude of applications, such as:

- AI copilots in productivity tools

- Medical and legal document summarization

- Interactive customer service bots

- Low-latency AR/VR applications

Final Thoughts

The updated Gemini AI suite underscores Google’s strategic intent to lead the AI developer ecosystem by delivering both computational power and adaptable solutions. Whether you’re an enterprise architect planning large-scale data processing or an indie developer creating mobile-first AI tools, the refined Gemini 2.5 family offers the tools you need to build, scale, and innovate.

Stay tuned as more updates are expected throughout the year, potentially including even more specialized models and improved integrations across Google’s ecosystem. For now, Gemini 2.5 Pro and Flash Lite are ready to usher in a new age of scalable, efficient AI development.
