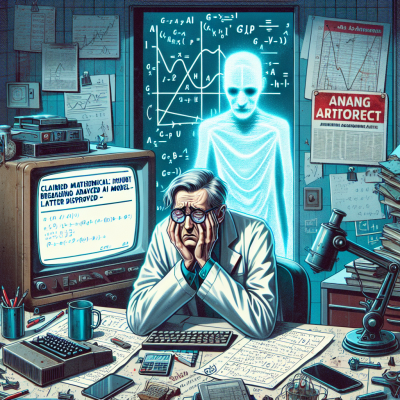

OpenAI’s Claimed Math Breakthrough Sparks Backlash

In the fast-paced world of AI research, bold claims attract global attention and scrutiny in equal measure. That’s exactly what happened when a leading OpenAI researcher announced a supposed mathematical achievement involving GPT-5. Widely shared on social media, the claim suggested that GPT-5 had passed a major milestone in mathematical reasoning. The story quickly unraveled, however, when members of the AI community, including prominent voices like Google DeepMind CEO Demis Hassabis, criticized the announcement as premature and lacking proper validation.

The Announcement That Sparked the Controversy

In early June 2025, an OpenAI research scientist took to X (formerly Twitter) to share news of what was described as a game-changing development in GPT-5’s ability to handle rigorous mathematics. The AI, they claimed, had demonstrated performance akin to human-level understanding in solving complex math problems.

The tweet gained immediate traction. Industry enthusiasts, journalists, and tech influencers amplified it, fueling excitement about what looked like a significant leap in AI capabilities, particularly in an area where large language models (LLMs) have historically struggled.

A Quick Retraction Amid Mounting Scrutiny

However, within hours of the initial announcement, criticism started pouring in. One of the most notable voices was Demis Hassabis, the CEO of Google DeepMind. On X, Hassabis pushed back on the researcher’s claims, calling the way they were communicated “sloppy” and urging more scientific rigor in how such breakthroughs are shared with the public.

Why Hassabis’ Criticism Matters

Hassabis is a respected figure in the AI space, known for leading groundbreaking projects such as AlphaGo and AlphaFold. His public dissent added weight to the growing skepticism. Others in the academic and AI communities echoed his concerns, pointing to the absence of peer-reviewed evidence or supporting documentation.

Under pressure, the OpenAI researcher retracted the claim, clarifying that the results had been misinterpreted and did not represent a formal research breakthrough. The retraction, however, came too late to avoid reputational damage, and many questioned OpenAI’s internal review process and its protocol for external communications.

The Gap Between AI Perception and Reality

LLMs like GPT-5 have shown striking progress, but mathematics remains a significant challenge. Language models can mimic problem-solving by reproducing familiar patterns, but genuine mathematical reasoning requires strict logic, precision, and an understanding of concepts that goes beyond surface-level language patterns.

Key limitations of LLMs in math reasoning include:

- Contextual ambiguity: Math problems often hinge on exact definitions, which LLMs can misread when a term’s meaning shifts with context.

- Step-by-step reasoning: Even when trained on structured data, LLMs may skip essential logical steps.

- Lack of verification: Unlike symbolic solvers or formal proof checkers, LLMs don’t inherently verify correctness.

The Responsibility of AI Communication

This incident highlights a growing concern: how AI research is being communicated to the public. Public trust and understanding of AI developments can be skewed by overstated claims, especially when they come from reputable institutions like OpenAI.

Key takeaways for AI communication:

- Transparency: Claimed breakthroughs should be backed by peer-reviewed evidence or at least clear documentation of methodology and results.

- Accountability: Researchers need to align with their organizations to ensure consistent messaging and avoid the perception of a “hype-first” approach.

- Scientific rigor: Announcements of major AI milestones must prioritize precision over virality.

What This Means for GPT-5 and OpenAI

Despite this misstep, GPT-5 remains at the leading edge of AI system development. The model is expected to improve on GPT-4’s capabilities across a broad range of tasks, including language comprehension, coding, and general problem-solving. Yet this controversy is a reminder that claims about even the most capable models are not immune to scientific overreach, or to the backlash that follows.

For OpenAI, recovering from the incident may require more careful stewardship of public statements. This includes building stronger internal guidelines and possibly rethinking how teams engage with the community around early-stage experiments.

The AI Community Responds—And Reflects

In the wake of the incident, many AI researchers have taken the opportunity to reflect on the broader impact of premature claims. Several community contributors have emphasized the importance of independent validation and reproducibility, suggesting that future claims should be accompanied by open-sourced benchmarks or published methodology.

Amid the backlash, some have also called on researchers to use platforms like X more responsibly, arguing that professional restraint applies even in informal communication channels.

Looking Ahead: A Lesson in Scientific Integrity

The GPT-5 math scandal is not the first time an AI claim has caused a stir, and it likely won’t be the last. But it serves as a critical case study: in an industry driven by innovation and competition, integrity and transparency must stay at the core.

This event underscores the need for AI companies, especially those with public influence, to communicate scientific progress with clarity, humility, and a rigorous emphasis on truth. While AGI and advanced problem-solving remain valid goals, rushing toward headlines without substantiated proof risks undermining both credibility and progress.

Final Thoughts

The excitement surrounding AI’s potential should not replace the discipline required to achieve it. While OpenAI continues to lead in cutting-edge research, this incident is a potent reminder that great influence brings great responsibility: to the scientific community, to the public, and to the truth.
